In January, global media broke the news of a French woman named Anne who was scammed out of over $800,000 after believing she was in an online relationship with Brad Pitt. The scammer used fake profiles, WhatsApp accounts, and even a fake passport to build the illusion. Yet Pitt has no social media presence. The scheme was made possible by AI-generated pictures of the actor.

This case is just one example of how digital deception has reshaped the online world. Once seen as a space for connection, collaboration, and open communication, the internet has increasingly become a breeding ground for manipulation and distrust.

“The cynicism we now have about interacting online is a profound shift,” said Adrian Ludwig, Chief Technology Officer at Tools for Humanity, during the Web Summit’s “Identity Crisis” panel in Doha on Tuesday.

Every day, users like Anne encounter AI-generated content without realising it.

“Trust in online identities is crumbling,” Ludwig said. “You might be scrolling through a social site or dating app, realising you don’t trust any feedback from people you don’t know personally. Those people may not even be real.”

Deepfake technology—once the domain of Hollywood special effects—is now widely available.

“There’s been over a million different free tools online that allow anybody to make a perfect deepfake without any technical experience,” explained Ben Colman, CEO and founder of Reality Defender, a platform specialising in deepfake detection.

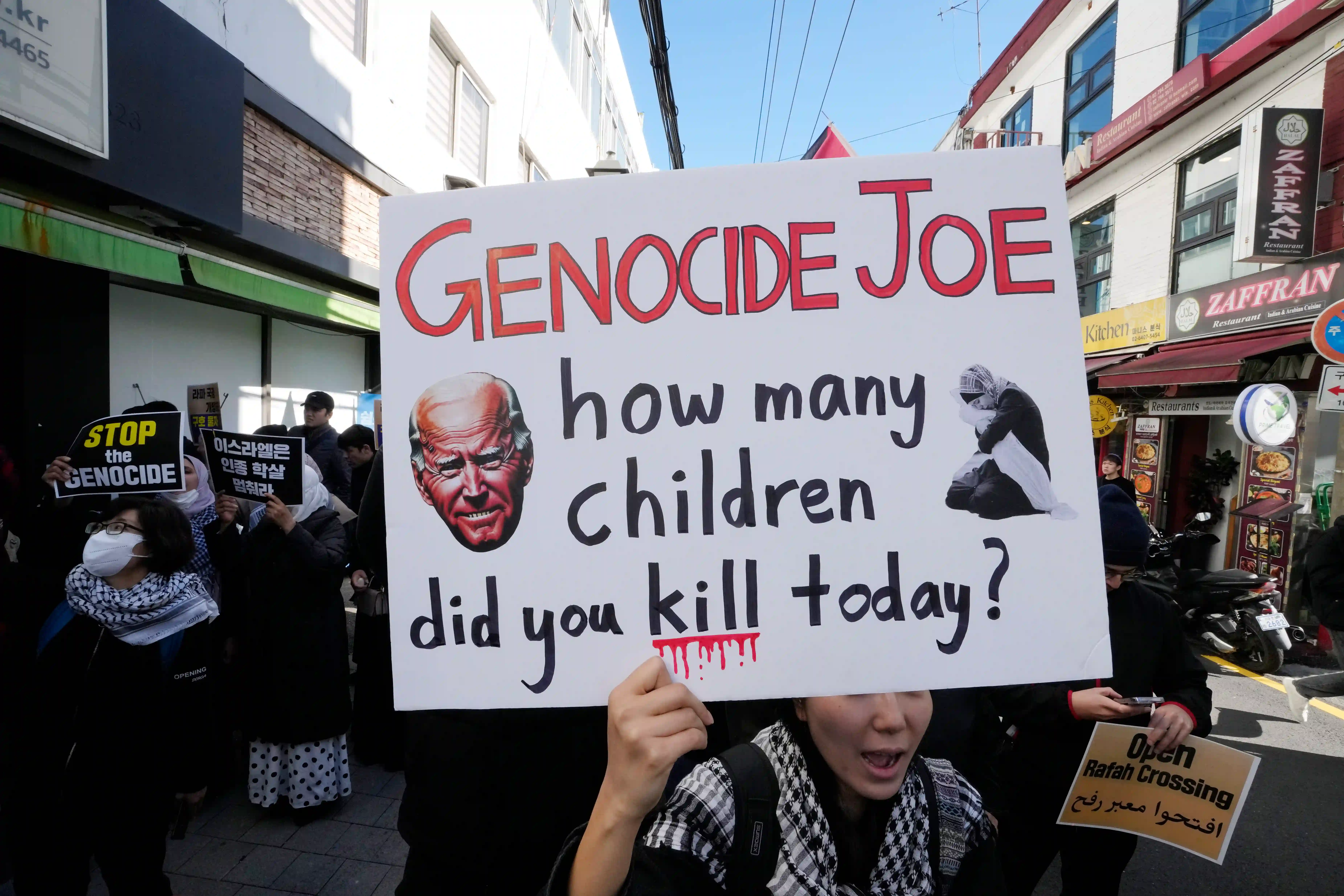

Deepfake technology, which can create hyper-realistic digital puppets, has blurred the line between fact and fiction. Because the technology remains lightly regulated, experts have long warned of its potential to fuel unrest or political scandals, and those warnings are now coming true.

Crises of trust

Sinan Aral, an MIT professor and a leading researcher on misinformation, explained that deepfakes pose three primary threats: health misinformation, election interference, and corporate and personal fraud.

“The consequences are huge but subtle,” Aral said, pointing to vaccine hesitancy.

“Seven million people died from COVID, and even now, 28% of the population remains unvaccinated. The CDC found that misinformation accounted for 80% of that hesitancy,” he said.

Elections are another crucial domain.

Aral said that people’s opinions can be changed simply by altering the information they are fed, and that misinformation tactics focus on voter turnout, which determines who forms governments.

“My research shows misinformation shifts whether people show up to vote, not how they vote,” he said, citing Facebook’s past large-scale social experiments as evidence of how easily public perception can be shaped.

Fraud, including phishing scams, identity theft, and fraudulent transactions, is another growing battleground.

“Hundreds of millions are lost yearly to scams,” Aral said, referencing cases where grandparents receive fake, urgent messages demanding money transfers.

For Aral, the true danger lies in the systemic nature of deepfake deception.

“You have to go from an individual deepfake to the system level,” he said. He described a scenario where AI-driven networks optimise and coordinate misinformation campaigns aimed at key swing states, vulnerable individuals, and high-value financial targets, all while refining their tactics using data on user behaviour.

“Instead of interpreting it as a ‘random false event,’ think about a system that can coordinate and optimize millions of deepfakes.”

Vanishing line between human and machine

As AI grows more sophisticated, personal data has become a liability. “Why is having so much personal data risky?” Aral asked.

The more information available online—names, addresses, financial details—the easier it is for AI-driven scams to exploit it.

“There isn’t a root of trust right now for being a human on the internet,” Ludwig said. The challenge, he argued, is defining human identity without requiring people to reveal ever more sensitive information. “Right now, you give up more and more of your identity, where you live, your credit card info, just to verify you’re real,” he added.

Even well-known public figures have been impersonated in these schemes.

Aral recounted a case he personally experienced, where someone used deepfake technology to impersonate George Clooney and promote a fraudulent trading algorithm.

“It was very scary and very real,” he said.

Arms race for digital authenticity

Maintaining digital authenticity is becoming increasingly difficult, and experts describe the situation as a contest between deception and detection.

“We are in an arms race,” Aral said. “We’re facing an onslaught of rising misinformation and identity deception.”

The solution, he said, is a multi-layered defence that combines privacy-preserving tools with rigorous verification systems. “We need all the tools that we can get for anonymous applications so we can trust where information is coming from,” he said, adding that AI-generated deception must also be exposed.

Ludwig emphasised that this is about more than online safety. “All societal advances — collaboration, coordination, communication — depend on trust,” he said. “Without it, cooperation breaks down, and the internet risks becoming a space where no one knows what’s real.”

“The battle for digital authenticity is far from over,” Ludwig said. “But through awareness, prioritisation, and the right tools, we can still ensure the internet remains a safe place.”